Read Part 1: What’s Wrong with Weather Data

Hopefully by now, you understand why there’s a problem with how weather observation and forecasting are done today. In the following sections, we’ll explain how Tomorrow.io is going about solving this problem.

Three years ago, before Tomorrow.io was even officially founded, we looked at the weather space and quickly understood three things:

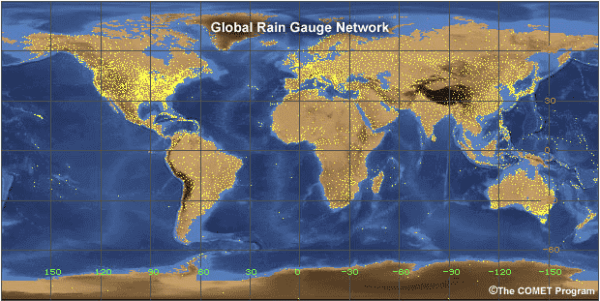

- Weather observations are the “currency” of meteorology – without them, forecasting doesn’t work.

- This currency is in short supply, and badly distributed, often not reaching places where it would do the most good.

- The weather enterprise is stuck, and continuing the path of hardware deployments isn’t going to change that.

Our conclusion? We needed to rethink observations altogether.

Insight: if weather affects nearly everything, then nearly everything is a potential sensor.

You may have seen “weather forecast rocks” in souvenir shops. “Place outside,” the directions tell you. “If wet, then there’s rain.” A joke, yes, but it gets at a basic truth: we can use weather’s impacts to see weather better. And certain systems, already deployed, turn out to be extremely powerful sensors.

One of the most crucial is also one of the most widely deployed technologies in the world: the wireless signals that power cell phone and media communications. If you’re a weather expert, you’ve probably never thought of wireless signals as even remotely associated with weather. But if you’re a network engineer, you have nightmares about “rain fade”, where the signal is attenuated during heavy precipitation. Water absorbs and scatters microwave signal energy – so when it rains, there are subtle changes in signal strength, up to complete signal loss in certain conditions (remember your favorite show going to static on satellite TV during a severe thunderstorm?). The same is true for any type of “water” in the air, not just rain. Snow, sleet, ice pellets, and even humidity are all discernible if you know what to look for, and how to look for it.

Imagine for a second that you had special glasses that could see wireless signals. Without them the world looks normal. Put them on, and you see the thousands of wireless signals traversing all around you. They’re everywhere. Microwave signals passing between thousands of cell towers across the city, supporting millions of devices. Satellite signals beaming down and up from space, hitting every satellite TV receiver in every corner of the planet. Point-to-point networks built for hospitals, financial traders, cloud providers, railways, and many more. There are literally millions of these links across the planet. Most can (and some already do) “sense” the weather.

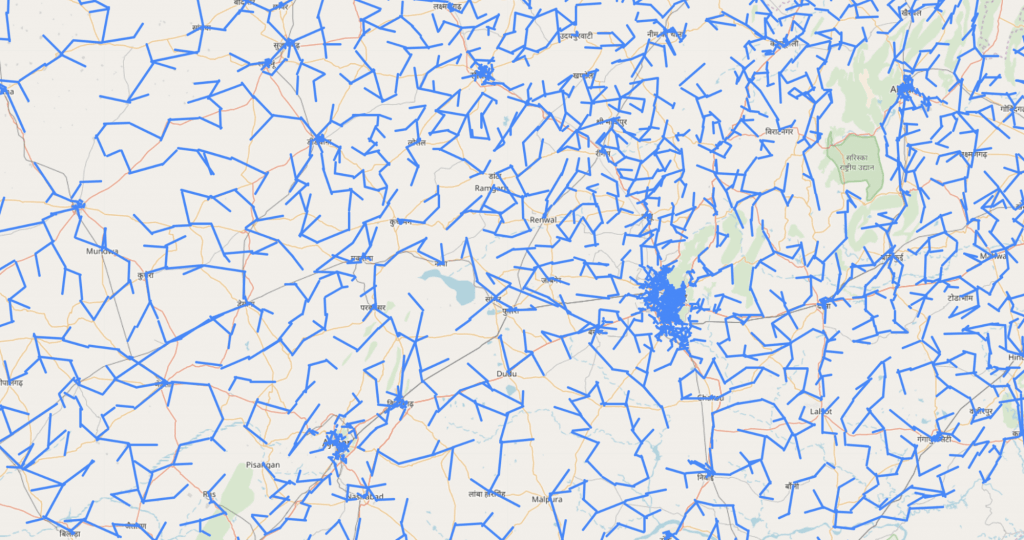

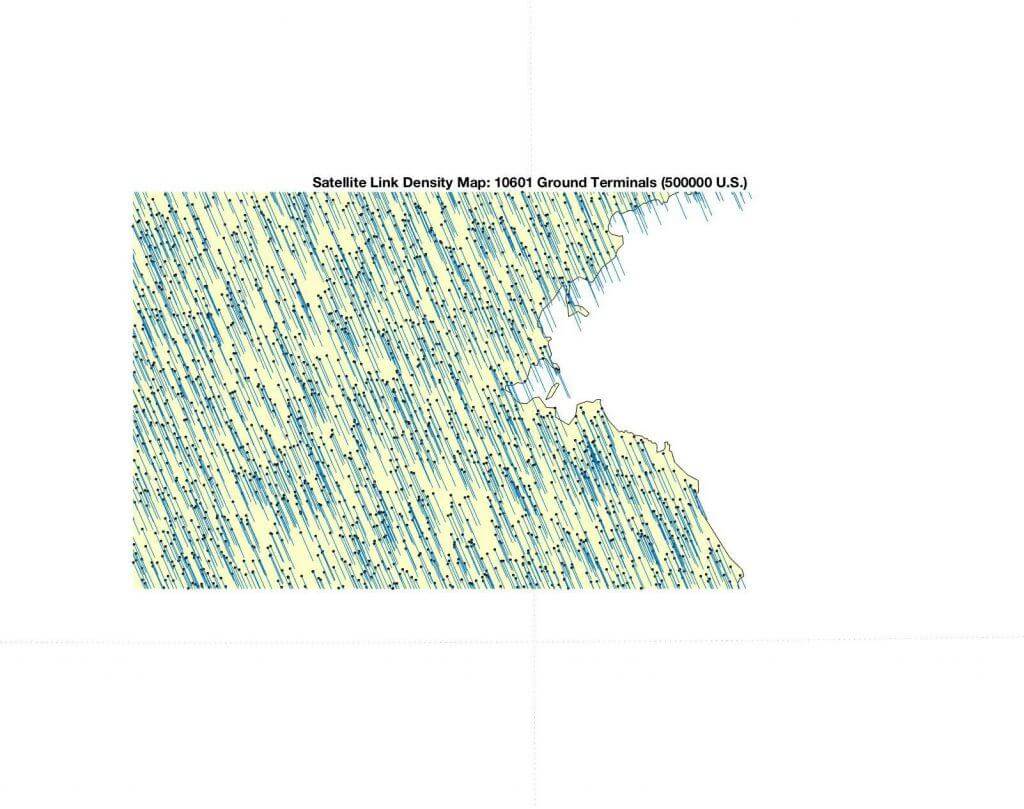

A typical cellular network topology in an area of 3,000 km², covering urban, suburban and rural settlements in India. The exact coordinates of the wireless links are randomized. Source: Tomorrow.io

This is a typical cellular network topology. The blue lines on this map represent microwave links — point-to-point wireless connections between two cellular towers, used to send information back and forth across a cellular network. Most of the public never even thinks about these links, and even specialists see just a communication network.

But we see something completely different. We see a sensing network. If we can see changes in wireless signal strength, we can see the weather. At fantastic resolution. Our “sensors” are already deployed all over the globe, no new hardware required.

Implementing this idea, though, is challenging. The data is owned by a multitude of companies, from cellular carriers to satellite carriers to dozens of other private and governmental network operators. Getting access to, and subsequently extracting the relevant data in compliance with very strict regulations and security standards, is a hell of a task, involving all sorts of IT and architecture complexities. And that’s just to get the data in its raw form, before we even start to talk about the algorithms to turn it from wireless noise into meaningful weather observations.

Three years into this, and Tomorrow.io has data extraction capabilities that are network-agnostic, compliance bullet-proof, secure, and deployed in dozens of networks around the globe with some of the largest operators out there. We’ve developed the algorithms that turn wireless signal measurements into weather data, to a point where we often outperform traditional sensors such as radars, that were built especially for that purpose.
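We won’t go into the production algorithms here, but the physics behind them can be sketched in a few lines. A common textbook approximation relates a link’s rain-induced attenuation per kilometer to the rain rate through a power law, with coefficients that depend on frequency and polarization (tabulated, for example, in ITU-R Recommendation P.838). The sketch below inverts that relation for a single link. The function name, the `k` and `alpha` defaults, and the sample numbers are illustrative assumptions, not Tomorrow.io’s actual method, and the sketch ignores complications such as wet antennas and baseline drift.

```python
def rain_rate_from_attenuation(baseline_dbm, received_dbm, path_km,
                               k=0.1, alpha=1.0):
    """Estimate path-averaged rain rate (mm/h) for one microwave link.

    Inverts the power law  attenuation_per_km = k * R**alpha.
    k and alpha are placeholder values chosen for readability;
    real coefficients depend on the link's frequency and polarization.
    """
    if path_km <= 0:
        raise ValueError("path_km must be positive")
    # Extra signal loss compared with the dry-weather baseline (dB).
    excess_db = max(baseline_dbm - received_dbm, 0.0)
    # Spread the loss evenly along the link (dB/km).
    specific_db_per_km = excess_db / path_km
    # Invert the power law to get the rain rate.
    return (specific_db_per_km / k) ** (1.0 / alpha)


# Hypothetical link: 5 km long, signal drops from -40 dBm to -45 dBm.
rate = rain_rate_from_attenuation(-40.0, -45.0, 5.0)
print(f"Estimated rain rate: {rate:.1f} mm/h")
```

Note that a single link only gives an average along its path; turning thousands of overlapping links into a map is where the real work lies.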

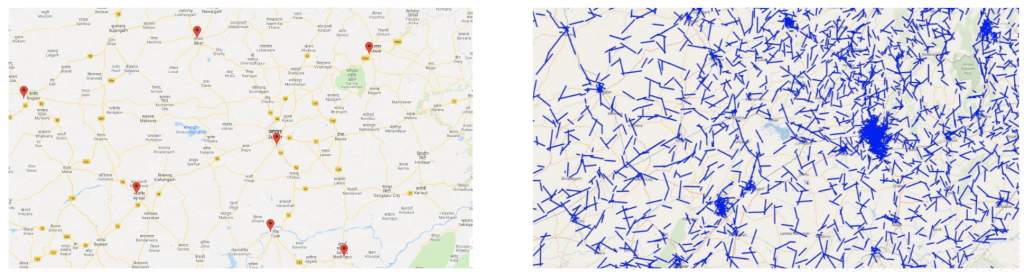

Here is that same network from above, compared with the existing alternative for rain sensing – the Indian Meteorological Department (IMD) network of weather stations:

Government Weather Stations vs. Tomorrow.io’s Virtual Sensors

(Left) Seven public weather stations covering an area of ~3,000 km². (Right) The same area with thousands of Tomorrow.io microwave links, each a weather sensing point. Source: Tomorrow.io

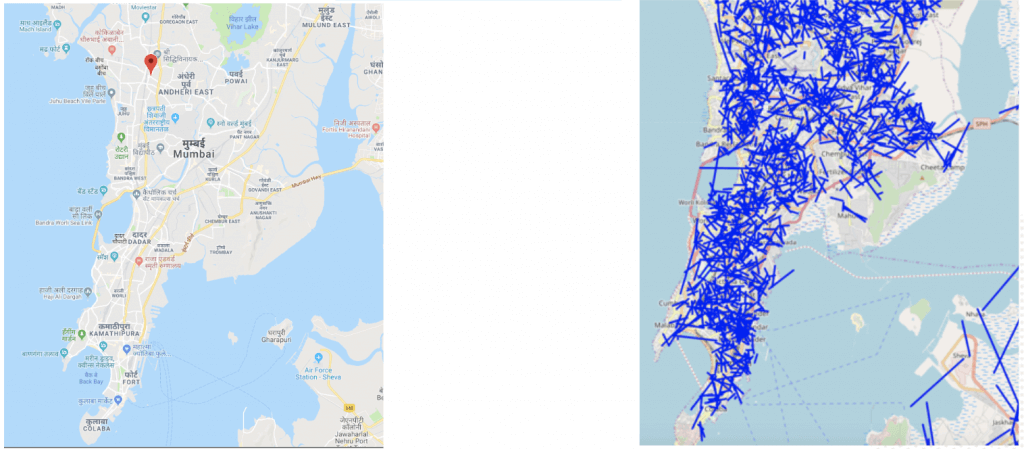

And here is that same comparison, weather stations versus cell towers, focused on Mumbai, a city of 19 million people.

Government Weather Stations vs. Tomorrow.io’s Virtual Sensors

(Left) One public weather station in the entire city of Mumbai (at the airport) (Right) The same area with thousands of Tomorrow.io microwave links, each a weather sensing point.

There’s even more coming from the sky!

Simulated density map of satellite-to-ground terminal links operating at C, Ku and Ka bands (most sensitive to weather), across the eastern part of Massachusetts. Real-life distribution is obviously not as uniform, but nevertheless useful for sensing. Source: Tomorrow.io

IMPORTANT NOTE ABOUT DATA PRIVACY:

Before getting into the powerful insights we gain by using virtual sensors, we want to highlight insights we don’t gain:

WE NEVER GET EXPOSED TO ANY PRIVATE DATA. EVER

We don’t need it. We don’t want it. By design, we do not get anywhere near it.

The data we rely on for Virtual Sensing is not sensitive, private, or even attributable to any of our data partners’ users. With microwaves, for example, we only look at the signal strength (the energy level of the signal), rather than at the signal itself (data moving across networks). If we think of a cellular network as a highway, and the data passing through it as the cars, then we’re not looking at the cars; we’re just paying attention to how many lanes are open on the road. We’re compliant with GDPR, and have been cleared by regulators in multiple countries to handle these types of data.

In addition to privacy, we’re also maniacally focused on security. We follow strict opsec, infosec, and cybersecurity standards, far beyond what you’d expect of a company dealing with weather, to ensure that our customers and partners know their data is safe with us. Bottom line: we take privacy and security very seriously.

Ok, we can move on.

The Virtues of Virtual Sensing

At this point you might ask yourself: “Fine, so you have access to all of these new data points. So what? Can you show that you produce better results?” Well, we wouldn’t be here if we couldn’t, right? 🙂

Here’s a first example.

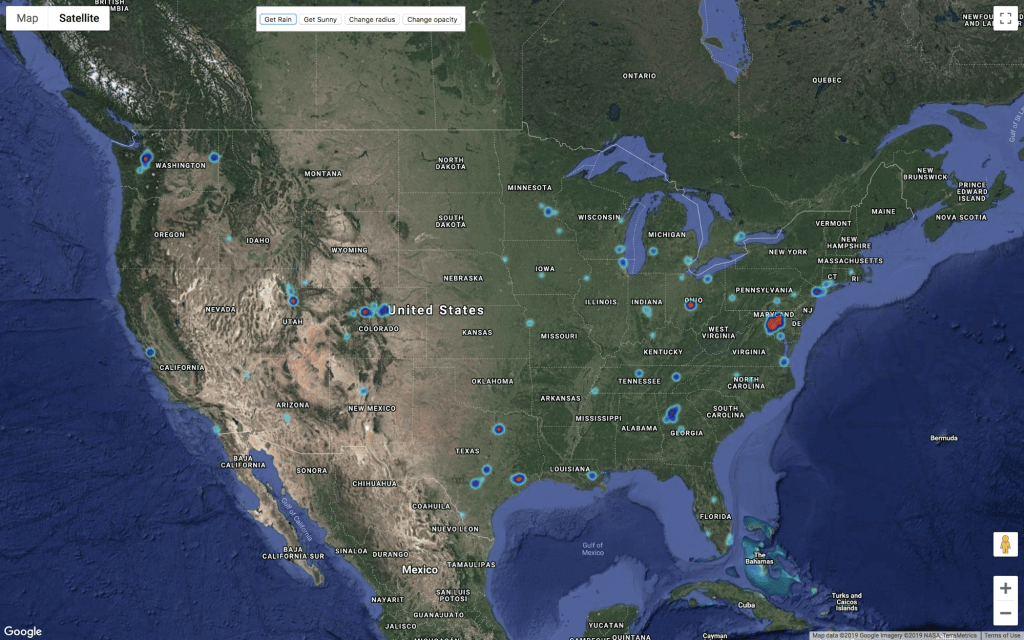

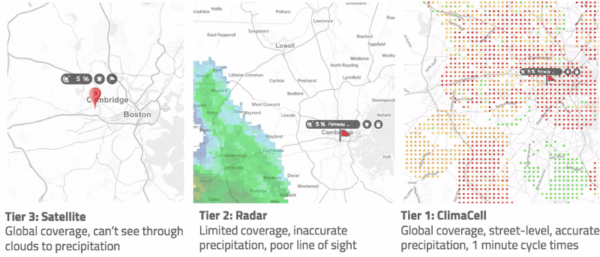

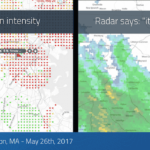

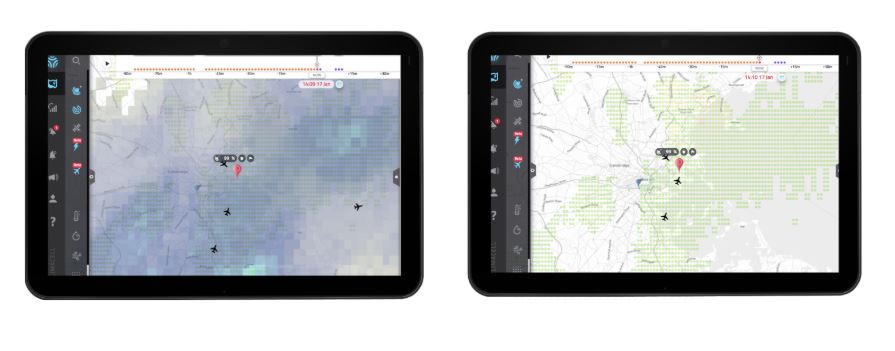

Source: Tomorrow.io

On the left we see a typical rain event over the city of Boston (coincidentally where our headquarters are located), as captured by NEXRAD, the radar system operated by NOAA. On the right is that same event, as captured by our “integrated layer” – the algorithm that fuses data from traditional sources (e.g. radars and rain gauges) with data from our virtual sensors (e.g. microwaves and others that we’ll discuss shortly).

The radar – the imaging we’re used to – suggests, in a blurry way, that there’s rain all over Boston and the suburbs around it. Our integrated layer corrects that blurry impression and shows exactly where rain falls, and where it doesn’t.

How can virtual sensing be so much more accurate? Radars shoot their beams to the sky, capturing precipitation occurring at several thousand feet above the ground, which in most cases is not exactly representative of what’s going on at the surface level. The microwave links, by contrast, function as point-to-point radars with beams running very close to the surface (rooftop height, where cellular antennas are located), so they capture the precipitation that actually reaches the ground.

Another difference you’ll notice is the spatial resolution (the size of each pixel). Radar’s resolution is typically around 2 km (a bit better close to the radar, and worse farther away). That’s about the size of a quarter of Boston. When you add hundreds of new sensors (roughly the number of cell towers in a city Boston’s size), you can reconstruct precipitation maps at a much higher resolution. We currently operate a 500 m grid, but we can easily make it even finer for specific use cases.

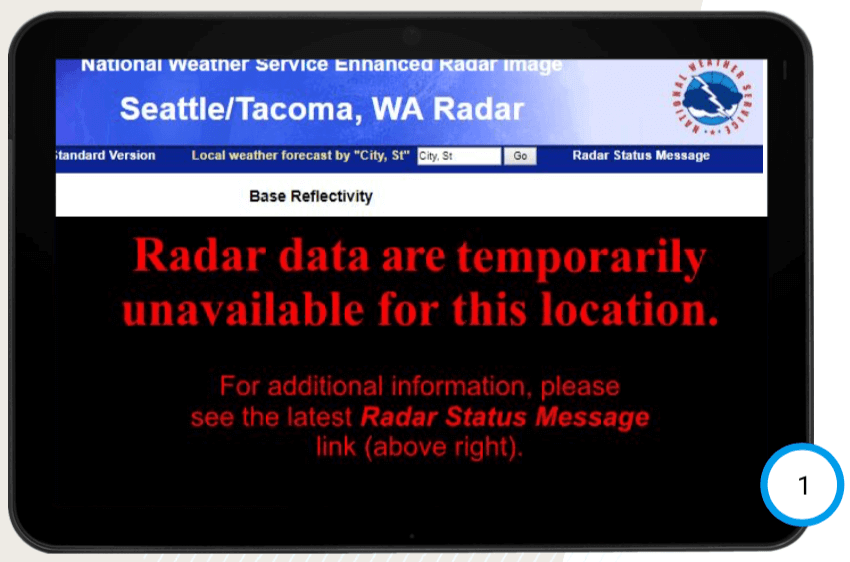

There are many more advantages to adding new sensing points. For example, when you’re counting on just the governmental sources, sometimes you face this situation:

NWS Website (and all other weather companies) Vs. Tomorrow.io:

Sources: NOAA and Tomorrow.io

And the U.S.’s National Weather Service gets a 10/10 in the world league of meteorological agencies. In fact, many radars outside of the developed world spend most of their time non-operational, due mainly to poor maintenance.

WAIT, THERE’S MORE.

Virtual sensing doesn’t end with cell phone towers and satellites. Tomorrow.io, three years in, sees nearly infinite opportunities to create new weather observations. As long as something is affected by the weather, and there’s a reasonable path to get near-real-time data from it, there is no limit to what we can leverage.

Imagine if we had a way to measure how many umbrellas are open in a certain area – wouldn’t this be a clear indication of the fact that it’s raining? We have a bit of a geeky term for it – reversing the arrow of inference.
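For the curious, “reversing the arrow” is essentially Bayes’ rule: we know how likely open umbrellas are when it rains, and we flip that around to ask how likely rain is when we see open umbrellas. Here is a minimal sketch. The function name and every probability in it are made-up numbers for illustration only, not measured values.

```python
def posterior_rain(prior_rain, p_signal_given_rain, p_signal_given_dry):
    """Bayes' rule: probability of rain given that the signal was observed."""
    # Total probability of seeing the signal at all (rain or no rain).
    evidence = (p_signal_given_rain * prior_rain
                + p_signal_given_dry * (1.0 - prior_rain))
    return p_signal_given_rain * prior_rain / evidence


# Illustrative assumptions: it rains 20% of the time; umbrellas are open
# in 90% of rainy moments and in 5% of dry ones (sun, habit, fashion).
p = posterior_rain(prior_rain=0.20,
                   p_signal_given_rain=0.90,
                   p_signal_given_dry=0.05)
print(f"P(rain | umbrellas open) = {p:.2f}")
```

With these assumed numbers, seeing open umbrellas lifts the probability of rain from 20% to roughly 82%. The weaker the link between the signal and the weather, the smaller that jump.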

Here are a couple more examples (the ones we share with the world…).

Cars are magnificent weather sensors, even though not designed for the purpose. Nothing in the world today can detect tiny, local amounts of drizzle (for reasons already discussed, such as radar lacking sufficient sensitivity) – but our eyes can, and our hands activate windshield wipers, which can give us the most accurate rain maps in the world; the ABS system can tell us how slippery a road is; and fog lights are a unique indicator for low visibility.

You’ll notice a pattern here: Tomorrow.io positions people, and human systems from cell phone towers to car safety devices, not just as passive consumers of weather information, but as active producers of it.

To be sure, some of this data is REALLY noisy. Not all drivers respond in the exact same way to changing weather, and so we have to do a lot of machine learning tricks to remove outliers and nonsensical data, and convert those observations into meaningful and trustworthy weather data. Once we do, though, we have human activity informing us how the weather affects…human activity. It’s a robust system.
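To give a flavor of what cleaning noisy crowd data can look like, here is one simple, classical outlier filter based on the median and the median absolute deviation (MAD). It is a stand-in for illustration, not the machine learning pipeline described above; the function name, the sample readings, and the 3.5 cutoff (a common rule of thumb for this score) are assumptions.

```python
from statistics import median


def filter_outliers(readings, threshold=3.5):
    """Drop readings whose modified z-score exceeds the threshold.

    Uses the median and MAD instead of mean and standard deviation,
    so a few wild values cannot distort the yardstick itself.
    """
    values = list(readings)
    med = median(values)
    mad = median([abs(x - med) for x in values])
    if mad == 0:
        # More than half the readings are identical; no usable scale.
        return values
    return [x for x in values
            if 0.6745 * abs(x - med) / mad <= threshold]


# Hypothetical temperature readings (deg C) from nearby vehicles,
# including one sensor stuck at -40 and one baking in the sun.
temps = [21.0, 21.5, 20.8, 21.2, 35.0, 21.1, -40.0]
print(filter_outliers(temps))
```

In this toy example the two implausible readings are dropped and the five consistent ones survive.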

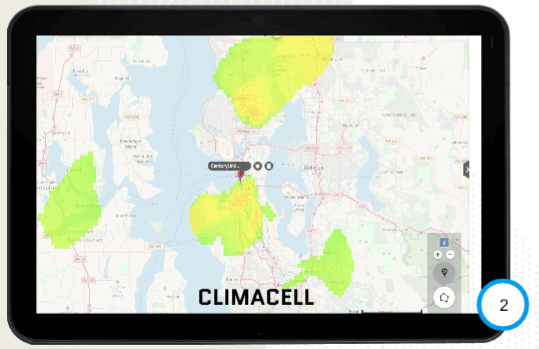

Source: Tomorrow.io

Discrete temperature measurements from over 1M connected vehicles in the Great Lakes region of the US, revealing micro-scale phenomena such as colder temperatures along Chicago’s coastline.

Mobile devices have over a dozen sensors. Using strictly anonymized, device-level (not personal) data, we are able to leverage readings from those sensors to gain unprecedented, “reverse the arrow” weather observations. It’s cold outside so your battery died sooner? Well, if batteries are dying sooner en masse, it may mean it’s cold outside. Barometric pressure sensors were added to cell phones to improve GPS accuracy, but we use them to see micro-scale synoptic maps that reveal hidden features in the atmosphere.

Source: Tomorrow.io

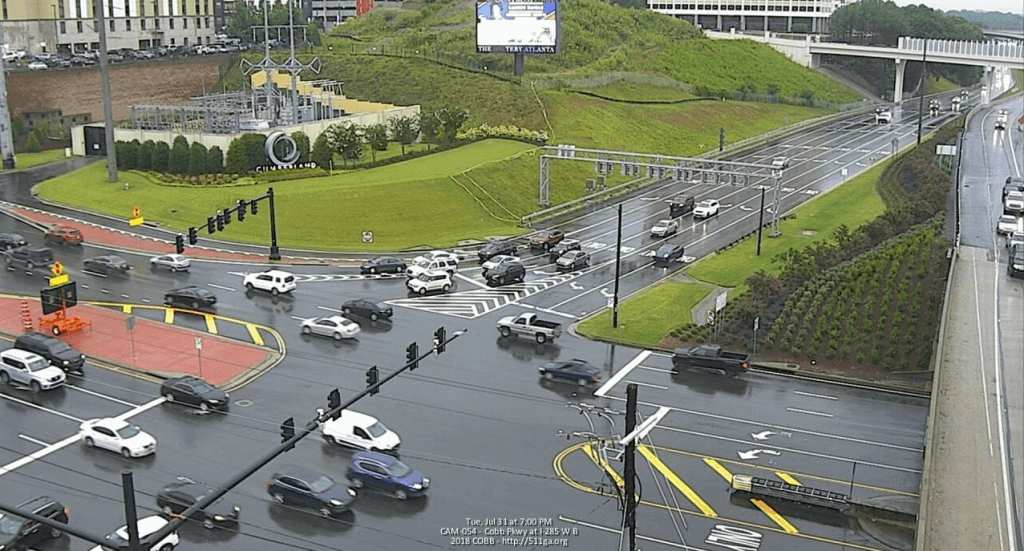

Onwards. Remember the “weather forecast rocks?” Well, road cameras can see rainfall making things wet just as well as we can. Using the latest in image processing and deep learning, we process data from hundreds of thousands of live outdoor feeds, and soon millions of dashcams, to identify weather conditions such as precipitation type and intensity, fog, cloud coverage and more.

Source: Tomorrow.io

A standard classifier may assume it’s not raining in the image above.

But it is – look at the cars’ windshield wipers.

Power Grid. Bzzz. Electricity. Always around us. Millions of electricity poles, high voltage lines, photovoltaic solar panels, wind energy turbines. Each is affected by the weather in different ways – conductivity, heating, power output.

Source: pixabay.com

What’s really important here is that all of these things – power grids, cellular networks, road cameras, connected vehicles, smartphones, etc. – exist in all countries, not just wealthy ones. We can leverage them to close the huge sensing gap that’s impacting millions of people.

That’s only the beginning. We continue to expand our portfolio of Virtual Sensing technologies, and to develop techniques to sense the weather from Electric Bikes and Scooters, Airplanes, and Drones, to name a few. We’ll be announcing more soon.

Our approach – finding things that “speak” weather – generates meaningful, high-quality data from otherwise non-weather data, and is a fundamental paradigm shift in how we and the broader weather industry think about meteorological-grade weather observations.

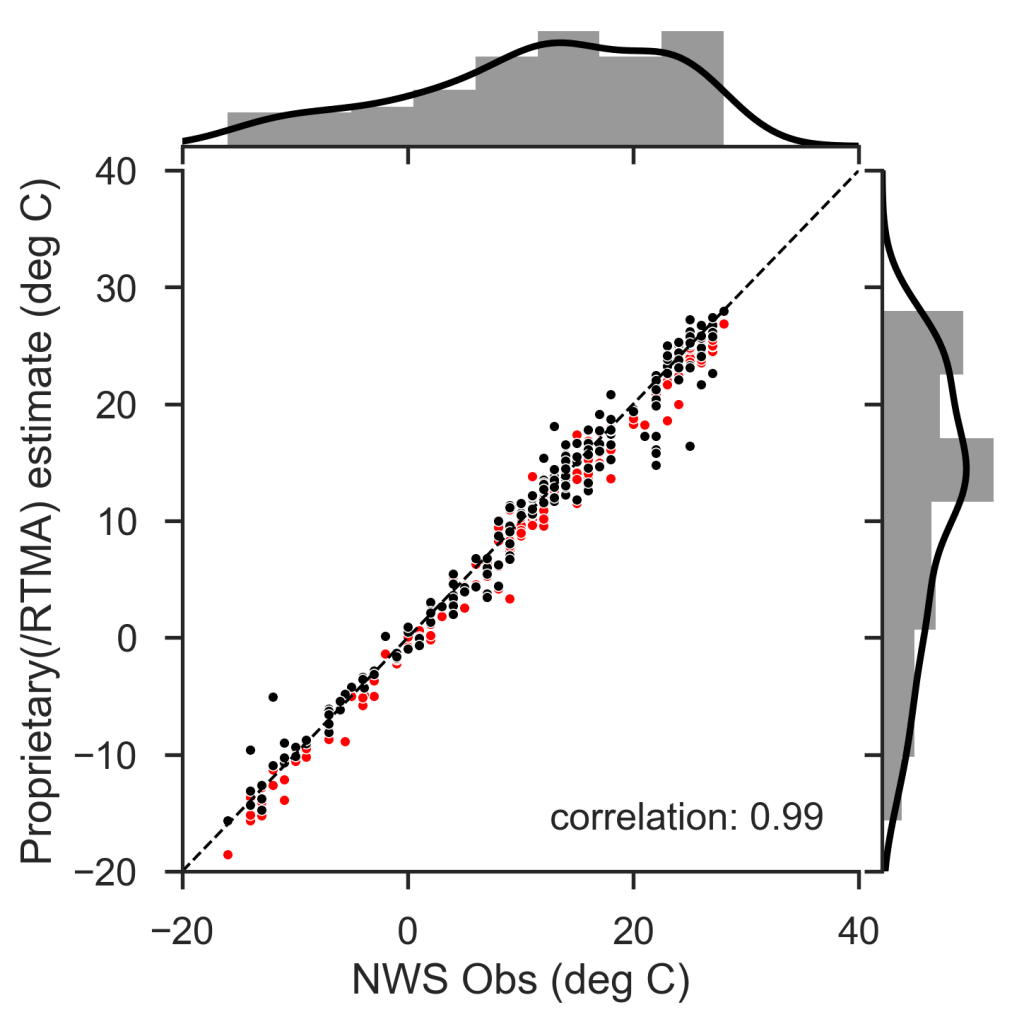

To be clear, our approach flies in the face of 40 years of industry and World Meteorological Organization (WMO) orthodoxy, which up until very recently maintained a strict belief that only “WMO-grade” weather observations are of any value and can be trusted for model initialization or any other analysis. In addition to the path-breaking R&D work we’ve done to extract meaningful weather data from weather-sensitive things, we’ve concurrently proven that our proprietary data correlates with the weather observed by highly calibrated, specialized equipment. In other words, our virtual sensors match what the best of the dedicated hardware sensors can show, but there are many more of them, everywhere in the world.

This also means we no longer have to wait for the deployment of WMO-certified weather stations in developing countries to make use of our sensed weather data. Recall that map of Mumbai: one weather station against thousands of cell tower connections. When the monsoon hits, which of the two can tell you whether your neighborhood is at risk of flooding?

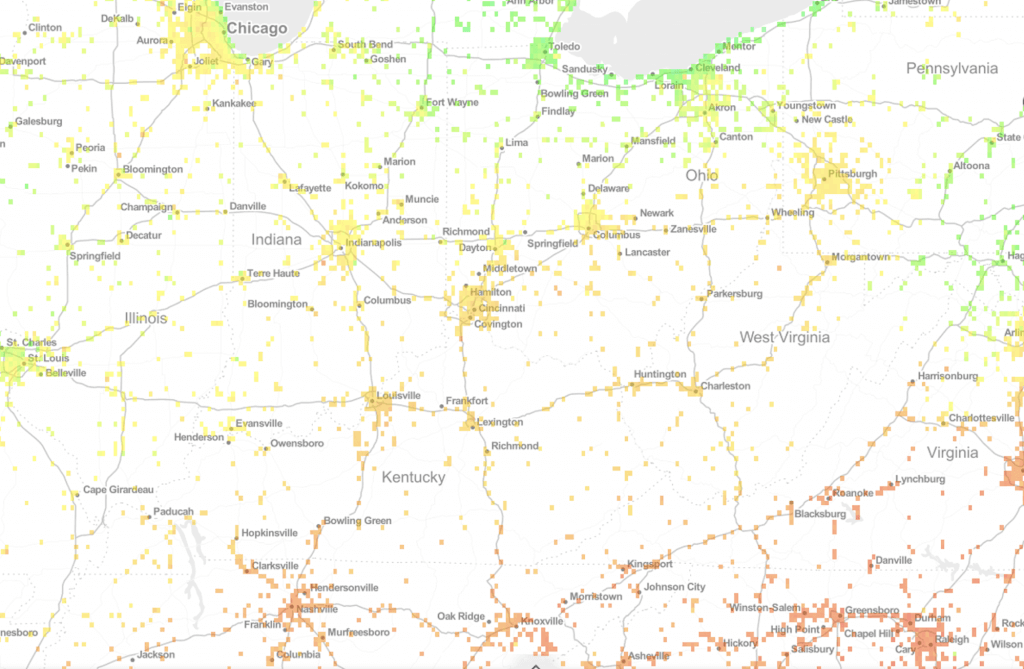

“Virtual” versus Traditional sensors

Correlation between temperature analysis using IoT measurements and traditional NWS observations. Source: Tomorrow.io

No sensor is perfect, of course, including the virtual sensors we develop. Radars and rain gauges each have their limitations, but so do microwaves, cameras and others. Still, a basic principle in signal processing says that the more independent datasets you add, the better the final accuracy you’ll get. We add many, many new datasets.
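That principle can be made concrete with a standard technique called inverse-variance weighting: when independent, unbiased measurements of the same quantity are combined with weights proportional to their reliability, the result is less uncertain than even the best single input. The sketch below is a textbook illustration under those assumptions, not a description of our integrated layer; the function name and the numbers are hypothetical.

```python
def fuse(estimates, variances):
    """Combine independent, unbiased estimates by inverse-variance weighting.

    Returns the fused estimate and its variance.
    """
    # More reliable sources (smaller variance) get larger weights.
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical rain-rate estimates (mm/h) for one location from three
# independent sources, with assumed error variances.
estimates = [4.0, 6.0, 5.0]
variances = [4.0, 4.0, 1.0]
value, variance = fuse(estimates, variances)
print(f"fused = {value:.2f} mm/h, variance = {variance:.2f}")
```

Here the fused variance (about 0.67) is smaller than that of the best individual source (1.0), and it keeps shrinking as more independent sources are added. The catch is the word “independent”: sources that share the same errors help far less.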

So, as we continue down the path of Virtual Sensing, our original hypothesis that we can “see” the weather much better if we try a new approach is proving very true. With each day, our algorithms get more effective, our data partnerships expand across the globe, and our ability to sense and make sense of weather from millions of devices continues to evolve. We believe we’re the first sprout of a revolution, and take great pride in it.