Outdoor Events

Fireworks, Forecasts, and the Fourth: How Weather Shapes America’s Biggest Independence Day Celebrations

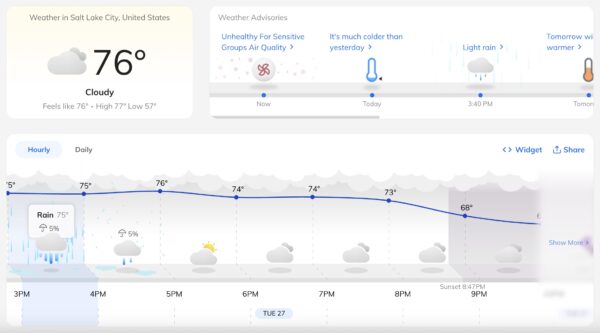

Every July 4th, millions of Americans gather under the night sky to watch dazzling fireworks displays light up skylines across the nation. From the iconic Macy’s show in New York City to San Diego’s Big Bay Boom, these events are the culmination of months of meticulous planning. Yet amid the choreography of pyrotechnics and patriotic […]

Jul 2, 2025 · 4 min