In recent years, there’s been an explosion of companies tapping nontraditional data sources to better understand our world. Orbital Insight uses satellite imagery to analyze everything from Walmart parking lots (to predict back-to-school sales) to construction sites (to predict new home growth). Dataminr sifts through social media platforms to find breaking news and identify trends that impact security, finance and news organizations. Foursquare uses location data to give brands powerful insights into their consumers’ behavior. At Tomorrow.io, we use data from the connected world – signals from wireless towers, connected cars, airplanes, drones and more – as the basis for our weather forecasts.

But while these datasets are capturing the public’s imagination and giving early adopters a clear advantage, the true catalyst for spinning miles of ingeniously derived data into silk often gets overlooked.

Ladies and gentlemen, meet the quiet, brainy models that are disrupting the way multiplying sources of information are analyzed, cross-referenced and presented. Why are data models important? A good model can turn chaotic, even overwhelming, data into powerful, actionable insights. An ineffective data model, by contrast, leaves potential pearls of insight buried and doesn’t serve the rapidly evolving needs of organizations.

A Weather Forecast Flop: How Does Slush Become a Snow Day?

Earlier this year, New York Mayor Bill de Blasio was pilloried for unnecessarily closing down the largest school system in the United States. Despite the forecasts of the National Weather Service, which had predicted close to a foot of snow, only five inches actually fell in Central Park. And totals were even lower, closer to two inches, in parts of Brooklyn and Queens.

One of the many tweets following Mayor de Blasio’s snow day decision

Mayor de Blasio had faced the opposite problem a few months earlier. In November 2018, a snowstorm hit the city and left special needs students stranded on school buses for 10 hours, prompting an outcry from parents and others who were critical of the lack of preparedness.

Part of the problem lies in the type of models commonly used in the United States. By comparison, the European forecast model has proven to be significantly more accurate in predicting major storms. But the significance of getting the weather right goes far beyond bragging rights for the European meteorological community. Lives and property can hang in the balance. In 2017, weather disasters cost the U.S. a record-setting $306 billion. And an increase in extreme weather brought on by climate change is only making it more important for weather models to be accurate.

Infobesity? There’s a Model For That…

Once upon a time, data mining was sufficient to handle vast quantities of often unstructured data. Analysis meant aggregating data in order to report a result, search for a pattern and find relationships between variables. The main problem with this approach to modeling is that it’s descriptive: it only summarizes past events. Classic data mining can’t predict the impact of a change in a variable.

The good news is that cheaper processing power, cloud computing and a proliferation of low-cost sensors are making it easier than ever to tap into a rapidly multiplying array of data sources. However, a growing problem that industries face is how to make sense of and draw important conclusions from all this new information. Without the right modeling capabilities, information can quickly morph into infobesity – a massive amount of data with little nutritional value…

This is where predictive analytical data models come into play. What makes predictive analytics revolutionary is that it uses collected data to predict what might happen. Those predictions are based on historical data and rely on human interaction to query data, validate patterns, and create and then test assumptions. AI-based data modeling takes this one step further, since it can actually learn autonomously.
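To make the difference between describing and predicting concrete, here is a minimal sketch in Python. The snowfall numbers are invented for illustration, and the function names (`describe`, `fit_line`, `predict`) are hypothetical. It is not how any real forecasting system works; it only shows that a summary reports what happened, while even a simple fitted line can answer a "what if the input changes?" question.

```python
from statistics import mean

# Made-up history: (forecast snowfall, observed snowfall), both in inches.
history = [(2.0, 1.5), (4.0, 3.0), (6.0, 4.5), (8.0, 6.0), (10.0, 7.5)]


def describe(records):
    """Descriptive step: summarize what already happened."""
    observed = [obs for _, obs in records]
    return {"mean_observed": mean(observed), "max_observed": max(observed)}


def fit_line(records):
    """Predictive step: least-squares fit of observed = slope * forecast + intercept."""
    xs = [x for x, _ in records]
    ys = [y for _, y in records]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in records)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, forecast):
    """Apply the fitted line to a forecast value not seen in the history."""
    slope, intercept = model
    return slope * forecast + intercept


summary = describe(history)        # what happened
model = fit_line(history)          # a relationship learned from the past
print(summary)
print(predict(model, 12.0))        # what might happen if 12 inches are forecast
```

The summary can only look backward. The fitted line, crude as it is, gives an estimate for an input that never appeared in the data.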

Models With Brains and Beauty

AI, specifically machine learning, is making it much easier to produce data-driven algorithms that result in more accurate predictions. AI-driven models can use incoming data to predict an outcome and then update those predictions as new data comes in.
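The idea of updating predictions as new data arrives can be sketched with a deliberately tiny stand-in for a learning model: a corrector that tracks recent forecast error with an exponential moving average. The class name `OnlineBiasCorrector`, the `alpha` parameter and the sample numbers are all assumptions made for this illustration. Real machine learning models are far richer, but the predict-then-update loop has the same shape.

```python
class OnlineBiasCorrector:
    """Corrects raw forecasts using a running estimate of recent forecast error."""

    def __init__(self, alpha=0.5):
        # alpha controls how strongly the newest observation moves the estimate.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.bias = 0.0  # running estimate of (observed - raw forecast)

    def predict(self, raw_forecast):
        """Return the raw forecast shifted by the current bias estimate."""
        return raw_forecast + self.bias

    def update(self, raw_forecast, observed):
        """Blend the newest error into the running bias estimate."""
        error = observed - raw_forecast
        self.bias = (1 - self.alpha) * self.bias + self.alpha * error


# Hypothetical stream of (raw forecast, observed) snowfall pairs, in inches.
stream = [(12.0, 5.0), (6.0, 4.0), (8.0, 6.5)]

corrector = OnlineBiasCorrector(alpha=0.5)
for raw, observed in stream:
    print(corrector.predict(raw))    # prediction made before the outcome is known
    corrector.update(raw, observed)  # the model adjusts once the outcome arrives
```

Each observation nudges the next prediction, so the model never needs to be rebuilt from scratch when new data comes in.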

These models are beautiful, but they’re also smart. Whip-smart. Integrating AI delivers significant cost, time and process-related savings quickly. AI-driven models improve the bottom line through intelligent automation, labor and capital augmentation, and innovation diffusion. By analyzing incidents in real time, AI can provide early warning of potential problems and propose alternative solutions. These benefits mean that AI has the potential to boost profitability by an average of 38% by 2035.

Overloaded On Info? Get Lean and Mean

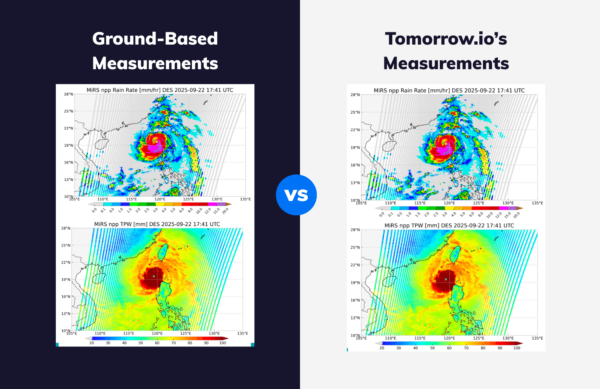

Tomorrow.io is channeling the massive potential of finely tuned data models to boost weather forecast accuracy. Our ingredients include lots of info: everything from data gleaned from wireless towers, connected cars, airplanes, drones and IoT devices, which we call Weather of Things, to the best existing sources of data goes into the mix.

But the secret sauce is the complex and highly effective models we use. It’s the models that turn disconnected data sets into powerful stories. We’re able to analyze, in real time, a whole host of relevant weather parameters, as well as the interaction between them. By focusing only on what matters, we can quickly understand the potential impact of temperature, humidity, cloud cover, lightning and many other factors. AI-driven models allow us not only to make the most of a vast number of data sources from all over the world, but also to tailor forecasts to a city block, literally.

Sure, emerging data sources are exciting to behold, read about and discuss. But the hard work of turning data into a goldmine of actionable insights is being done by models.

And only the right models will do…